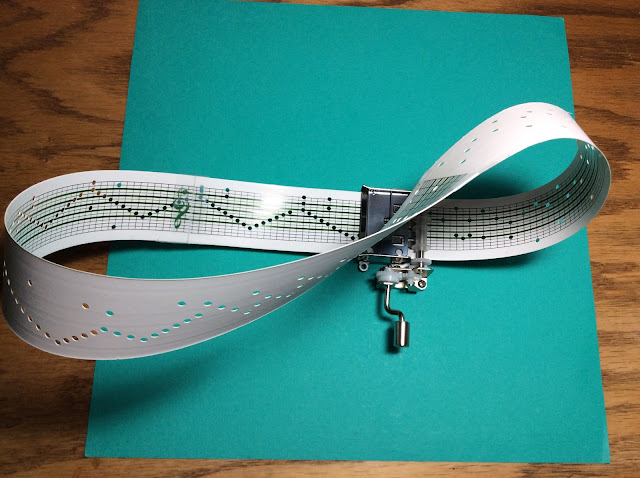

Möbius-ly

We live in a world where the idée fixe du jour is Artificial Intelligence and its potential to affect our existence as sentient occupiers of the space we choose to perceive, or maybe interpret [Plato's Cave?], as the world; so it is perhaps worth reflecting on our sentience, and our consequent perception, in the light of, for want of a better phrase, 'the competition'. Yesterday, I posted on Strange Loops, having only alighted on both the phrase and the concept that same morning, courtesy of the BBC, gawd bless 'er. As I said, my signal failure, over forty-plus years, to get to grips with what Douglas Hofstadter was getting at in "Gödel, Escher, Bach..." was suddenly thrown into relief - in many senses of the word - by what I heard in the discussion I mentioned.

After I had written last night's scribble, I wrote down a phrase that immediately sprang to mind: 'Strange Loops and Language Acquisition?'. I had studied postgraduate linguistics for a year under the then-overarching theories and groupthink of Chomskian deep structure and transformational grammar, and had left with the sour taste in my mouth that the prevailing orthodoxy was probably completely fallacious; so there was something in the concept of Strange Loops that appealed to me deeply. After waking late this morning and making tea, I Googled the very phrase I'd jotted down last night. It turns out that, indeed, an awful lot of people out there are turning their thoughts to just that area of study.

The mistake that Chomsky made was to posit, from the start, an ill-formed and naive idea as axiomatic to his theory. The statement that any natural language and its speakers are capable of constructing an infinite number of well-formed sentences within that language, and that learning all of those by rote and imitation would simply be impossible, is, prima facie, quite plausible. So he proposed that there must be some kind of innate proto-linguistic 'structure' sitting deep within us, hard-wired into the human brain by heredity. This notion, of course, caused widespread disquiet among those on the Left back in the day, who would have none of it because of the countervailing ideological stance that all human behaviour is taught and learned. Their worry was that, historically, inherited characteristics had been used to define and rank one section of humanity above another, with the success of one cohort over the other seen as 'the natural order'.

The thing is that Chomsky's theory of syntax never held up to scrutiny over time, in its own terms, anyway: at every step of the way, the damned thing got more and more complex, as increasing numbers of exceptions that would not fit the 'generated' rules had to be 'filtered' by subsets of rules, which fanned out in exactly the opposite fashion to the one the original theory posited: rather than explaining the languages, the rules ended up dominated by the languages' own wayward particularities. In short, the rulebook proved to be infinite in itself, and therefore essentially ineffable. Now, the curious thing about Large Language Model AI is that it deliberately goes in the opposite direction: brute force and ignorance. Give it shedloads of available data and bugger-all in the way of rule-sets, apart from the simple ability to recursively combine and test those data, and you have a system that can 'learn'. It's a bit like chess: there's an astronomically large number of possible sequences of moves in a game, but most of them are garbage, and have been finitely culled as such by generations of chess players, and latterly by the software engines that can play the game to the highest degree imaginable.

And here lies the crux and substance of it all: raw data is everywhere, but those atomic sub-entities have no inherent sense in and of themselves until they are combined into information: the very stuff of understanding. The process of forming information out of data is inherently recursive, and recursion is at the heart of everything we perceive as reality. The process of thought is itself recursive: our brains have the ability to alter themselves through the very act of thinking. The plasticity of thought is its principal characteristic: our software is infinitely reconfigurable by its very nature. We enter existence mentally as a blank slate - genome aside, but that's another thing altogether - and develop our awareness, our knowledge and our language through an iterative process of continuous self-regeneration, looping back into our thoughts and memories and updating, amending and editing our consciousness on a continual basis. We are indeed Strange Loops; as, I would say, is AI: and the mistakes that AI will inevitably make will also, inevitably, be exactly like the ones that our erstwhile unique human intelligence has always made. The apple never falls far from the tree...

Chomsky = Crooks Correction factors. TOO many of them; you know summat's wrong. How many variations of Planck's constant are in use?

ATB

Joe